Research

From my undergraduate research in the Haptics Group of the GRASP Lab at Penn to my graduate research in the CHARM Lab at Stanford, I have been fortunate to work for two amazing advisors, Dr. Katherine J. Kuchenbecker and Dr. Allison M. Okamura, respectively, both of whom focus on haptics and medical robotics. Haptics refers to the integration of the sense of touch into technology interfaces to provide additional information or feedback to a user or robot. For a good overview of haptics and its potential applications, I recommend watching my advisors' TED talks: Allison's talk can be found here and Katherine's is here. For a brief teaser about my research, watch the 5-minute intro talk I gave for the Medical Education in the New Millennium course at the Stanford Medical School in October 2014, or watch my full thesis defense (the talk starts about 10 minutes into the video) for more details.

Graduate Research

Haptic Jamming: Controllable Mechanical Properties in a Shape-Changing User Interface

In many medical procedures, clinicians rely on the sense of touch to make diagnosis and treatment decisions. Yet current medical simulators are limited in the variety of physical sensations they can generate: virtual reality simulators typically restrict interaction to a rigid instrument, and classic mannequins do not permit large changes in geometry or material properties. Encountered-type haptic displays allow the user to move freely in a large workspace without wearing or holding a haptic device. An end effector capable of adjusting its physical properties would maximize the variety and realism of the procedures that can be simulated. Many controllable tactile displays present the user with either variable mechanical properties or adjustable surface geometries, but controlling both simultaneously is challenging due to electromechanical complexity and the size and weight constraints of haptic applications.

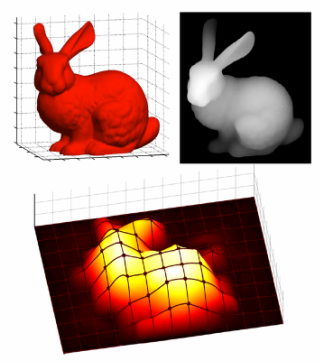

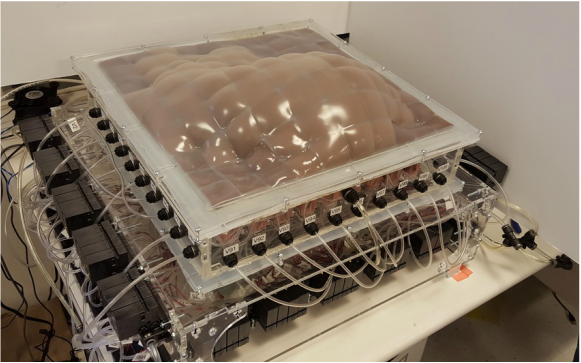

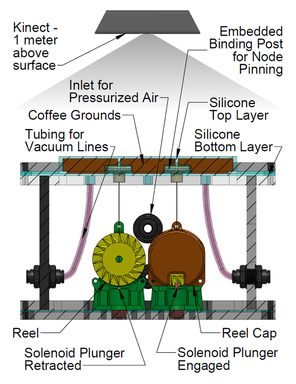

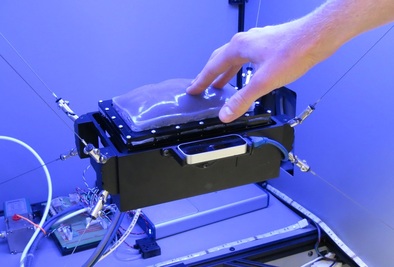

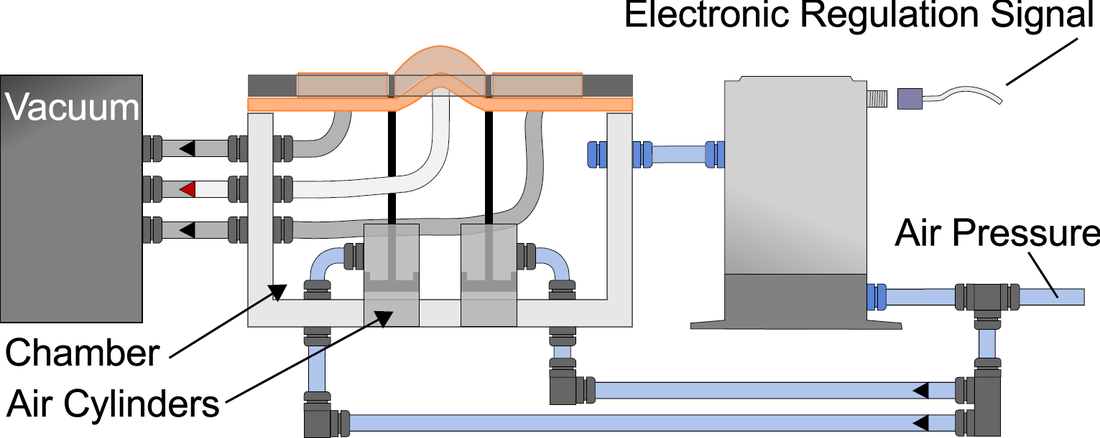

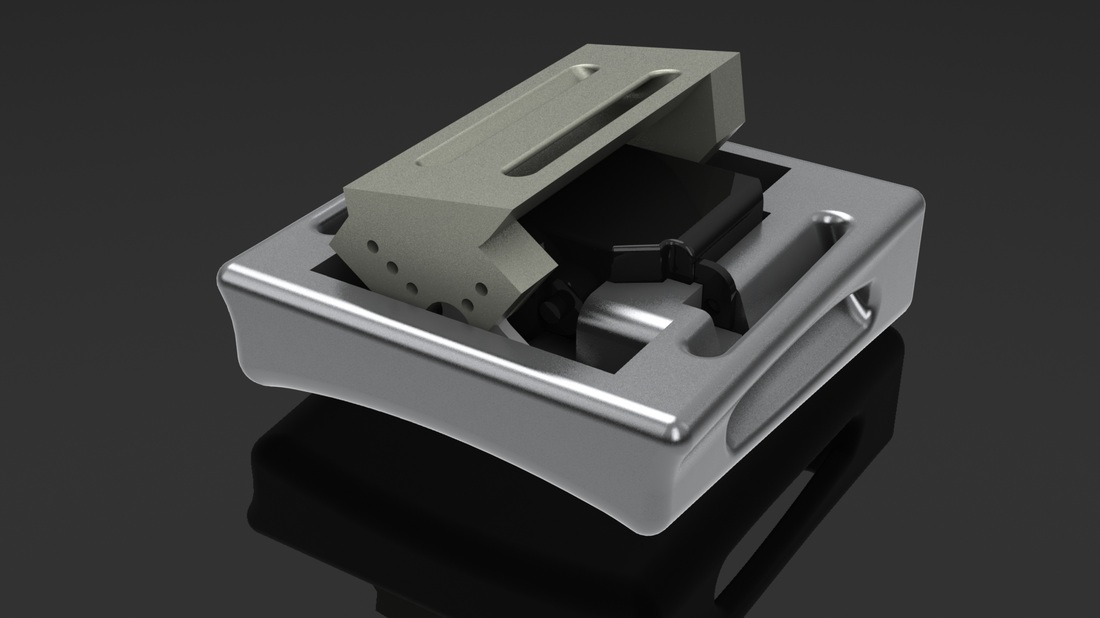

My graduate research first focuses on the development of a tactile display capable of simultaneously exhibiting variable geometries and adjustable mechanical properties (i.e., a surface that can change both shape and stiffness). Our proposed Haptic Jamming device, described in this paper we published at World Haptics Conference 2013, uses a hollow layer of silicone rubber, filled with coffee grounds and connected to a vacuum line. Decreasing the pressure inside the silicone below ambient pressure (i.e., vacuuming) jams the granules together, stiffening the surface. The silicone layer is clamped over the top of a chamber with controllable internal air pressure. In the unjammed state, adjusting the internal pressure of the chamber causes the silicone to deform like a balloon, as illustrated below. Air cylinders can selectively pin down nodes between cells, as further explained below. Subsequently jamming a cell allows it to maintain its ballooned shape in a stiffened state even after the chamber pressure is released. Thus, the surface geometry of an individual cell can be changed independently of the mechanical properties of the surface.
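
To make the shape/stiffness decoupling concrete, here is a toy Python model of a single cell. It is only a sketch: the linear relation between chamber pressure and bulge height (`compliance_mm_per_kpa`) and the class and method names are hypothetical assumptions for illustration, not measurements or code from the actual device. It follows the sequence described in the paragraph above: balloon the soft cell, jam it, then release the chamber pressure while the raised shape is retained.

```python
from dataclasses import dataclass


@dataclass
class JammingCell:
    """Toy model of one Haptic Jamming cell (illustrative only).

    Assumption: when unjammed, the bulge height follows the chamber pressure
    through a hypothetical linear compliance; when jammed, the granules lock
    the current shape and the height no longer tracks the chamber pressure.
    """
    compliance_mm_per_kpa: float = 0.5  # hypothetical value, not measured
    height_mm: float = 0.0
    jammed: bool = False

    def set_chamber_pressure(self, gauge_kpa: float) -> None:
        # Only an unjammed (soft) cell balloons in response to chamber pressure.
        if not self.jammed:
            self.height_mm = self.compliance_mm_per_kpa * gauge_kpa

    def jam(self) -> None:
        # Vacuuming the granular layer stiffens it and freezes the current shape.
        self.jammed = True

    def unjam(self, gauge_kpa: float) -> None:
        # Venting the vacuum softens the cell, so it again follows the chamber.
        self.jammed = False
        self.set_chamber_pressure(gauge_kpa)


cell = JammingCell()
cell.set_chamber_pressure(4.0)  # balloon the soft cell
cell.jam()                      # stiffen it in the raised shape
cell.set_chamber_pressure(0.0)  # release the chamber pressure
print(cell.height_mm, cell.jammed)  # 2.0 True
```

In this toy model, the height is set while the cell is soft and the stiffness is set afterward, which is the independence of geometry and mechanical properties that the device exploits.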

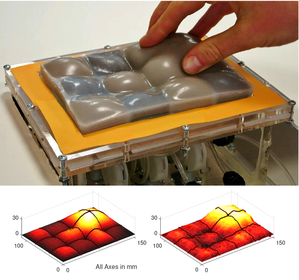

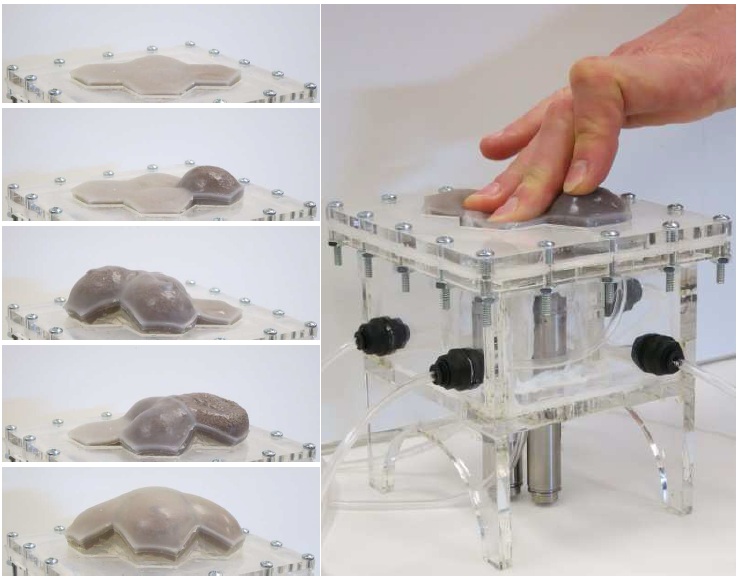

An array of multiple Haptic Jamming cells opens the door to display a variety of geometries beyond simple ellipsoids. Toward this end, we constructed a four-cell prototype to demonstrate the feasibility of combining multiple cells to display lumps of varying sizes and stiffnesses from a single surface array. In addition to the four hexagonal cells, two hex nuts are embedded in the silicone at each of the two internal nodes between the cells. The rods of two air cylinders are aligned directly below the two nodes and screw into these nuts. When the air cylinders are not pressurized, the rods move up and down freely with the motion of the silicone layer as it deforms. Pressurizing the top of an air cylinder forces the piston and rod downward, effectively pinning the node to stay level with the flat surface of the surrounding acrylic. Further development of this tactile display aims to increase the resolution and number of cells in the array while shrinking the overall profile of the device. Electronic valves and pressure regulators allow us to reconfigure the display in less than a second, as demonstrated in this video.

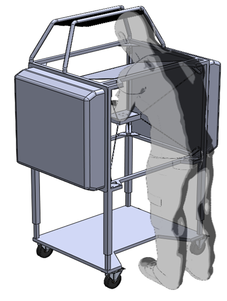

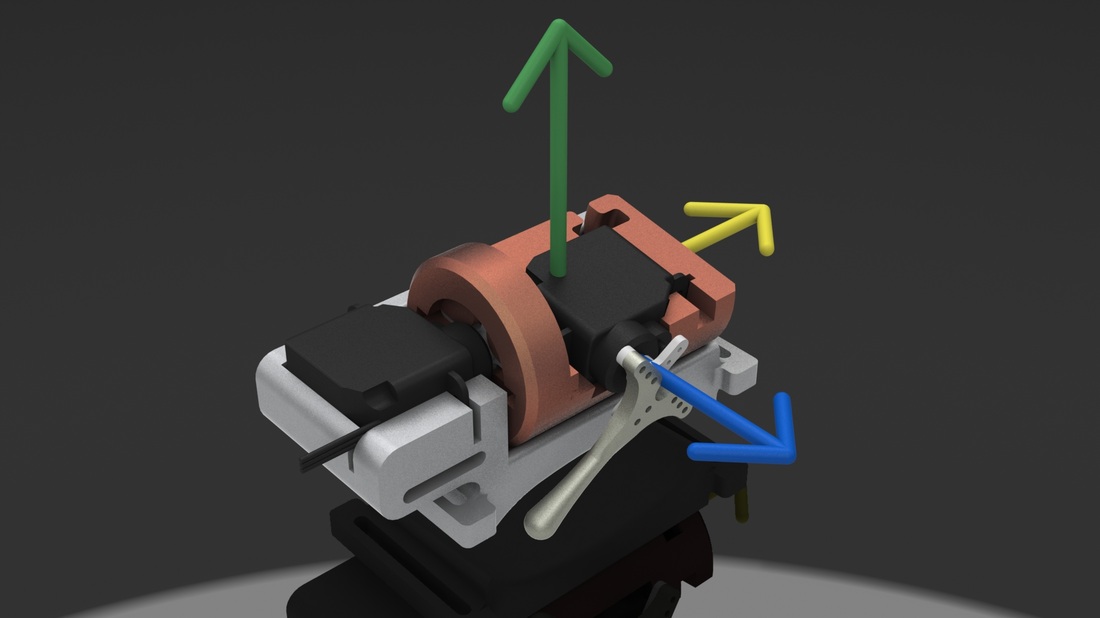

The design of the tactile display for medical simulation grew out of a collaboration with Intelligent Automation Inc. to place it on the end effector of the KineSys MedSim cable-driven robot shown below. Once development of both the Haptic Jamming display and the robot is complete, the next research phase will involve integrating the two systems into a single encountered-type haptic display. The tactile display will provide cutaneous feedback as the user touches it directly with his or her hand, while the cable-driven platform will provide the underlying kinesthetic force feedback. In addition, as the user moves his or her hand around the workspace, the platform will track the motion, effectively magnifying the workspace well beyond the size of the display itself while the tactile display adjusts to create a variable environment.

Robotic Needle Steering

While not yet implemented clinically, robotic needle steering shows great promise for reducing invasiveness, minimizing patient trauma, and improving overall outcomes in an array of medical applications. Tip asymmetry, such as a bend, curve, or bevel, causes a flexible needle to follow a curved trajectory when inserted into tissue. The first advantage over the standard straight-needle approach is that some previously unreachable targets may become accessible with steerable needles when a vital organ or bone blocks the straight-line path to the target. Moreover, by rotating the needle, one can adjust the plane of curvature and link together a series of arcs into a more complex three-dimensional trajectory, further expanding the regions of the body that the needle tip can reach. However, controlling a steerable needle to follow such trajectories is difficult, largely because the orientation of the needle tip must be tracked through multiple rotations and insertions, and limitations of medical imaging prevent this information from being displayed to the clinician. This has led many research groups to develop robotic needle insertion platforms that use motor encoders to precisely track both the insertion distance and the rotation of the needle throughout a procedure.
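
The orientation-tracking problem can be illustrated with the idealized constant-curvature model often used for bevel-tip needles: each insertion segment traces a circular arc, and each axial rotation turns the plane in which the next arc bends. The Python sketch below dead-reckons the tip position from a list of (rotation, insertion) pairs, the kind of values a robot's encoders could supply. It assumes no tissue deformation and no shaft torsion, and it uses a frame convention chosen here (shaft along local z, tip curving toward local -y); the function names and the 100 mm radius of curvature in the example are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a: Matrix, v: Vector) -> Vector:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def rot_x(t: float) -> Matrix:
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(t: float) -> Matrix:
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def tip_pose(steps: Sequence[Tuple[float, float]], curvature: float) -> Tuple[Vector, Matrix]:
    """Dead-reckon the tip pose from (rotation_rad, insertion_mm) steps.

    Assumed convention: local z points along the needle shaft and the bevel
    makes the tip curve toward local -y. Curvature is in 1/mm.
    """
    p: Vector = [0.0, 0.0, 0.0]
    R: Matrix = rot_z(0.0)  # identity: tip starts at the origin, heading +z
    for spin, length in steps:
        R = matmul(R, rot_z(spin))  # axial rotation reorients the bending plane
        theta = curvature * length  # angle swept by this arc
        if curvature == 0.0:
            d = [0.0, 0.0, length]  # straight needle: move along the shaft
        else:
            d = [0.0, -(1.0 - math.cos(theta)) / curvature, math.sin(theta) / curvature]
        p = [pi + di for pi, di in zip(p, matvec(R, d))]  # arc chord in world frame
        R = matmul(R, rot_x(theta))  # heading turns by the arc angle
    return p, R


# Hypothetical example: 100 mm radius, two quarter-circle arcs with a half-turn between.
kappa = 0.01
quarter = (math.pi / 2) / kappa
p, R = tip_pose([(0.0, quarter), (math.pi, quarter)], kappa)
print([round(v, 3) for v in p])  # S-shaped path ending near [0, -200, 200]
```

Rotating the needle by a half-turn between two equal arcs flips the bending direction, so the tip ends up offset sideways but heading parallel to its starting direction. Losing track of a single rotation would send the second arc the wrong way, which is why encoder-based tracking matters.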

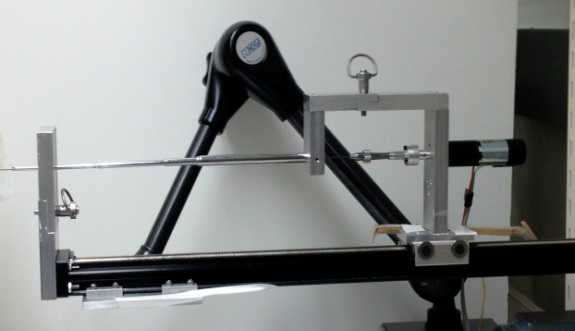

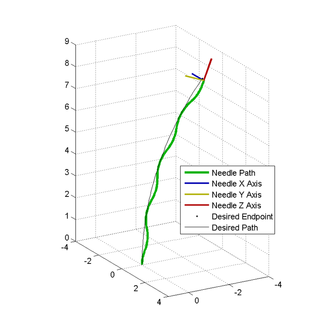

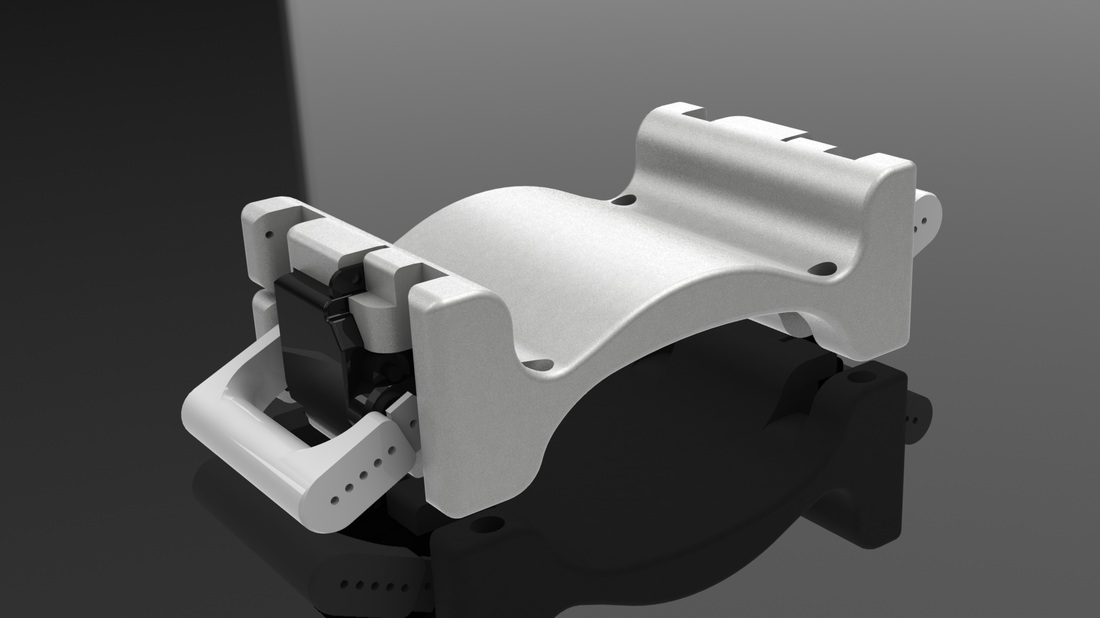

In my first year at Stanford, before joining the CHARM Lab full time, I completed a 6-month rotation in needle steering under the guidance of my lab mate Ann Majewicz. In the first half of the rotation, I designed and machined a set of quick-release attachments that allow the needle to attach to and detach from the robot easily, without any tools or screws. In the second half, I focused on developing new algorithms for controlling the needle path using precisely timed combinations of spinning the needle during insertion and flipping it between insertion segments. Controlling a steerable needle with a robot enables even more complex trajectories through the body by relaxing the constraint that the needle tip always follows arcs of constant curvature. Without this constraint, a more capable path planner can generate three-dimensional trajectories that cleanly navigate even the most complicated environments, such as the deep zones of the brain or the kidneys.
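
One way that spinning during insertion can relax the constant-curvature constraint is duty-cycled spinning, described in the needle-steering literature: phases of plain insertion alternate with phases of insertion while spinning. A commonly cited first-order approximation is that the effective curvature falls roughly linearly with the fraction α of insertion spent spinning, κ_eff ≈ κ_max (1 − α). The sketch below inverts that approximation to plan one insertion cycle. It is an assumption-laden illustration, not the algorithm developed during this rotation; the function names and numeric values are hypothetical.

```python
from typing import Tuple


def spin_fraction(kappa_desired: float, kappa_max: float) -> float:
    """Fraction alpha of each cycle's insertion spent spinning.

    Assumes the first-order model kappa_eff = kappa_max * (1 - alpha),
    where kappa_max is the needle's natural (non-spinning) curvature.
    """
    if kappa_max <= 0.0:
        raise ValueError("kappa_max must be positive")
    if not 0.0 <= kappa_desired <= kappa_max:
        raise ValueError("kappa_desired must lie in [0, kappa_max]")
    return 1.0 - kappa_desired / kappa_max


def plan_cycle(kappa_desired: float, kappa_max: float, cycle_mm: float) -> Tuple[float, float]:
    """Split one insertion cycle into (spinning_mm, non_spinning_mm).

    Spinning phases are assumed to complete whole revolutions so the bevel
    returns to the same orientation for each non-spinning phase.
    """
    alpha = spin_fraction(kappa_desired, kappa_max)
    spin_mm = alpha * cycle_mm
    return spin_mm, cycle_mm - spin_mm


# Hypothetical example: natural curvature 4 1/m (250 mm radius), target 1 1/m.
spin_mm, push_mm = plan_cycle(kappa_desired=1.0, kappa_max=4.0, cycle_mm=8.0)
print(spin_mm, push_mm)  # 6.0 2.0
```

Spinning for the whole cycle (α = 1) approximates a straight path, and never spinning (α = 0) recovers the needle's natural arc, so any curvature in between can in principle be requested.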

Undergraduate Research

Tactile Feedback Methods for Motion Guidance

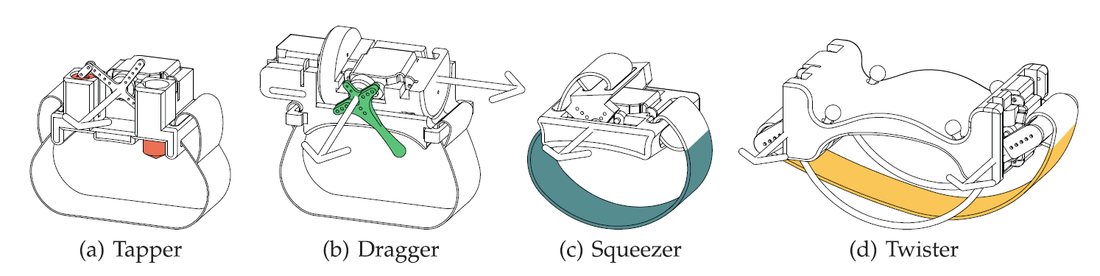

My undergraduate research focused on a sub-project of the larger StrokeSleeve project in the Penn Haptics Lab, which aimed to improve motor rehabilitation for stroke patients by providing tactile feedback to the patient's arm as directional cues in motion guidance tasks. My research sought to develop forms of tactile feedback that are more intuitive or natural than the vibration motors typically used as haptic cues. Inspired by the idea of recreating tactile sensations commonly experienced in everyday life, I spent my first summer in the lab iteratively designing, prototyping, programming, and testing a set of servo-based tactile actuators capable of tapping, dragging across, squeezing, or twisting a user's wrist. Each device could replay tactile sensations recorded from actual human contact, as described in greater detail in our paper from World Haptics Conference 2011, my first academic publication, and in the accompanying video:

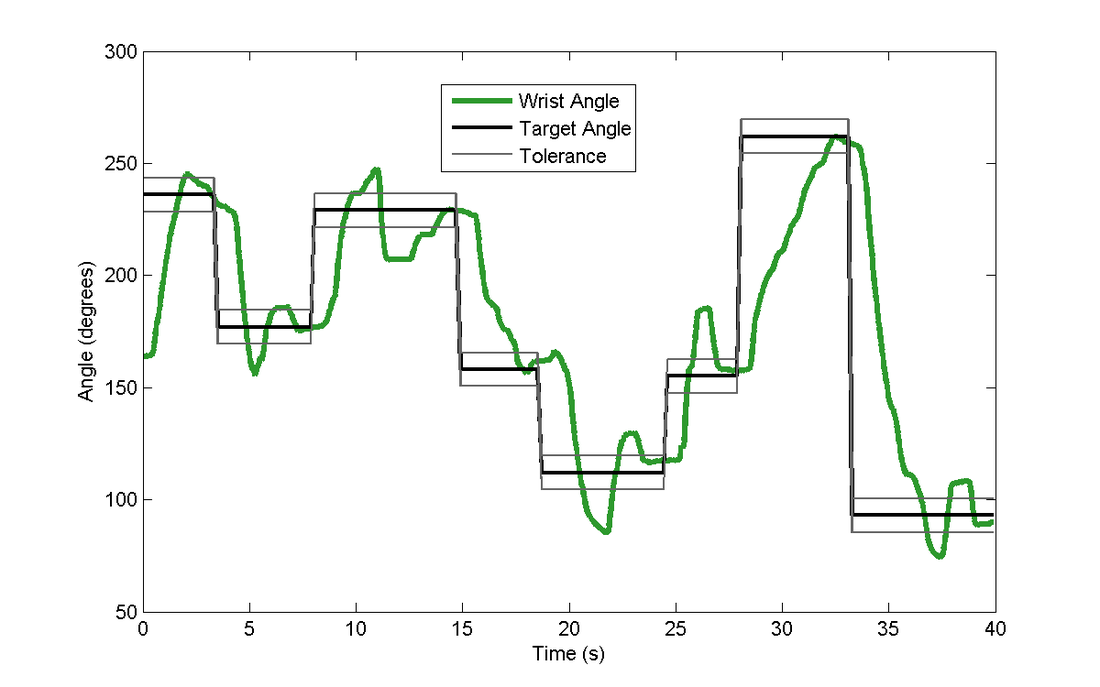

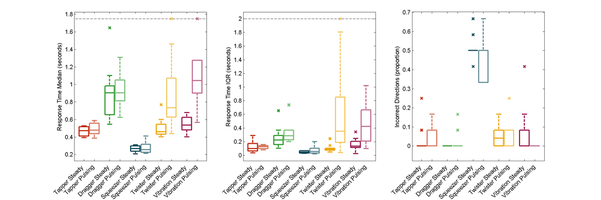

I continued this work with a user study comparing the performance of each of these actuators against vibration, the standard cue, for motion guidance in wrist rotation tasks. These tasks ranged from simply responding to the direction of a cue, to targeting a single angular wrist position, to tracking a rotational trajectory. We describe the experiments and results in this Transactions on Haptics paper, my first journal article.

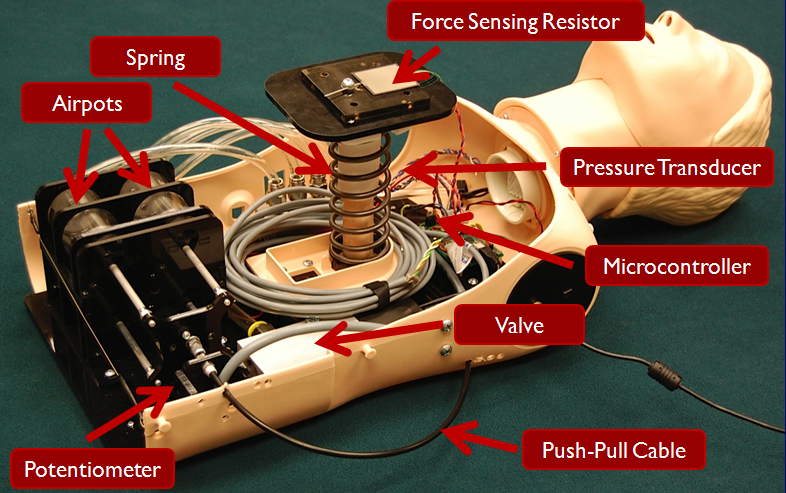

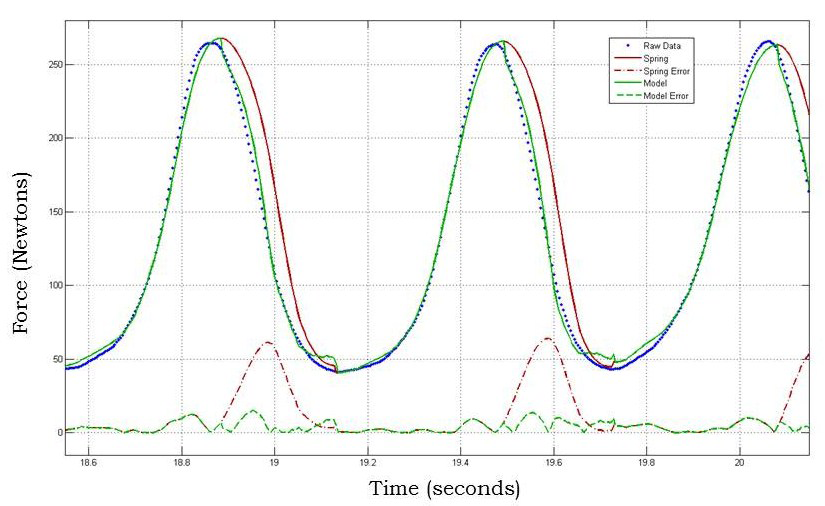

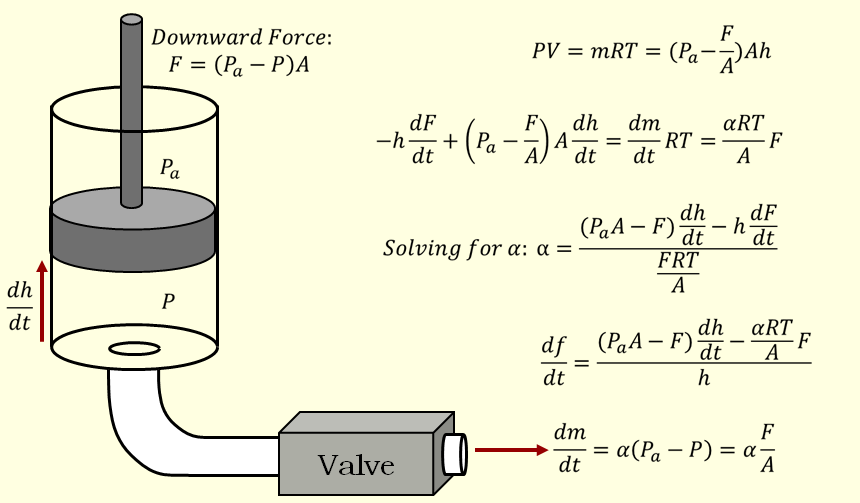

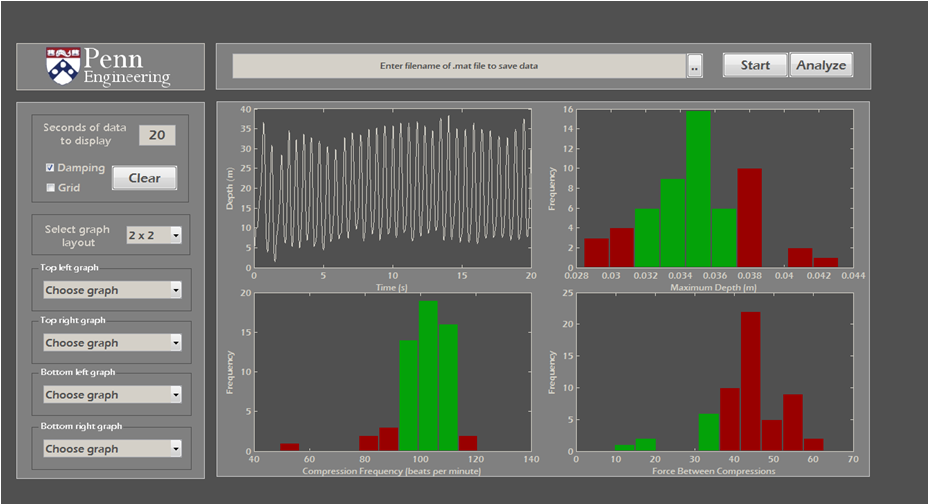

Biofidelic Manikin with Embedded Sensors for CPR Training

Along with one of my teammates, I continued to work on my senior design project during the summer following our graduation. This project involved building a manikin for CPR training that more accurately mimicked the force-deflection characteristics of the human chest using programmable dampers while providing additional feedback to the user from embedded sensors for improved training. We spent the summer redesigning a more robust version of the manikin and running a user study with clinicians in Philadelphia, resulting in a conference paper that we published at Haptics Symposium 2012. In addition to this paper, you can read more about this project on the Penn Haptics website or see our senior design poster on my Projects page.
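
The page does not specify the chest model used in the manikin. As a minimal sketch, assume the simplest linear spring plus programmable damper, F = k·x + c·v. With a nonzero damping coefficient c, the force at a given depth is higher while pushing in than while releasing, so the force-deflection curve traces a loop whose width can be tuned by programming c. The function names and the stiffness, damping, depth, and rate values below are hypothetical placeholders, not parameters from the project.

```python
import math
from typing import List, Tuple


def chest_force(x_m: float, v_m_s: float, k: float, c: float) -> float:
    """Force (N) of an assumed linear spring plus damper chest model.

    x_m: compression depth in m; v_m_s: compression velocity in m/s
    (positive while pushing in); k in N/m; c in N*s/m (the programmable part).
    """
    return k * x_m + c * v_m_s


def compression_loop(depth_m: float, rate_hz: float, k: float, c: float,
                     samples: int = 200) -> List[Tuple[float, float]]:
    """Sample (depth, force) pairs over one sinusoidal compression cycle."""
    omega = 2.0 * math.pi * rate_hz
    loop = []
    for i in range(samples):
        t = i / (samples * rate_hz)  # evenly spaced over one period
        x = 0.5 * depth_m * (1.0 - math.cos(omega * t))  # 0 -> full depth -> 0
        v = 0.5 * depth_m * omega * math.sin(omega * t)  # dx/dt
        loop.append((x, chest_force(x, v, k, c)))
    return loop


# Hypothetical parameters: 5 cm compressions at 100 per minute.
loop = compression_loop(depth_m=0.05, rate_hz=100 / 60, k=8000.0, c=100.0)
pushing, releasing = loop[50], loop[150]  # same half depth, opposite velocity
print(f"half depth: {pushing[1]:.1f} N pushing vs {releasing[1]:.1f} N releasing")
```

Setting c to zero in this model collapses the loop to a single line, so the damper setting alone controls how much the loading and unloading curves differ.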